Issue #197: Software Testing Notes

Building Reliability in AI-Generated Tests

Hello there! 👋

Welcome to the 197th edition of Software Testing Notes, a weekly newsletter featuring must-read content on Software Testing. I hope this week has been good for you so far.

This week, we will explore:

What if Your Test Data Could Tell Your Framework HOW to Run?

Test as Transformation – AI, Risk, and the Business of Reality

Iterate Dynamic Values in JMeter Using Extractors and Controllers

The Most Important QA Metrics That Actually Improve Team Performance

Mastering Cypress Network Requests & API Testing: A Complete Guide

and more…

✨ Featured

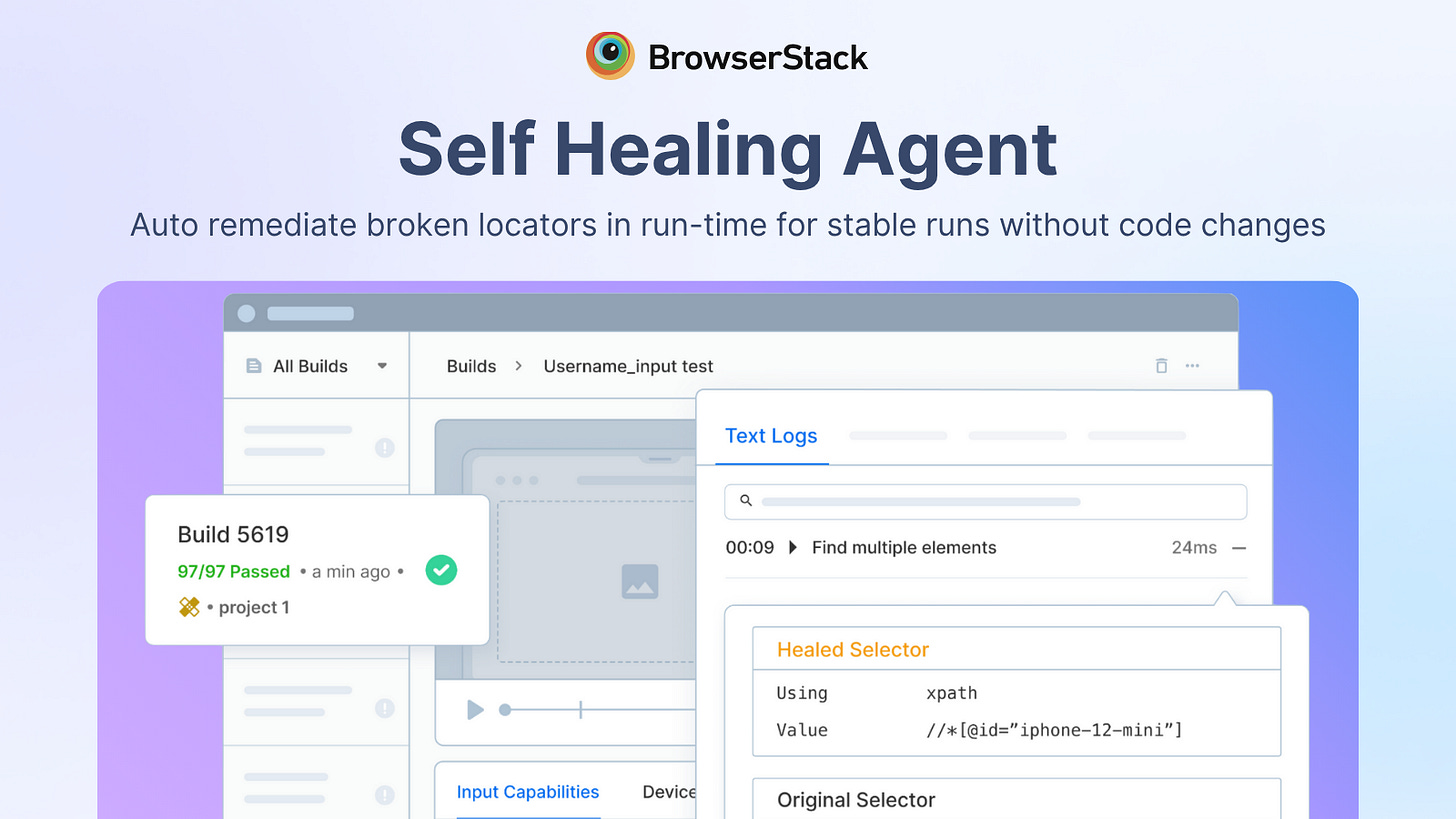

How to Drop Automation Build Failures by 40%

Some teams spend nearly 50% of QA time maintaining scripts. BrowserStack’s new Self-Healing Agent remediates broken locators at runtime, keeps pipelines green instantly, and auto-proposes permanent code updates for later. Compatible with Selenium, Playwright, and Appium. See how you can drop build failures by 40% here!

📚 Testing

Test as Transformation – AI, Risk, and the Business of Reality by Keith Klain

Keith Klain shares interesting insights into how testing should evolve as AI becomes the norm in software products. Keith argues that testing is no longer just about finding bugs, but about protecting businesses from legal trouble, unfair AI decisions, and hidden risks that automation can’t see.

How to Deal with Stressful Deadlines as a QA by Higor Mesquita

This piece is a calm, experience-backed look at handling QA under deadline pressure. If you’ve ever felt that end-of-sprint squeeze, you’ll find Higor Mesquita’s practical guidance on prioritising risk, using automation wisely, and making peace with the realities of shipping software.

The Most Important QA Metrics That Actually Improve Team Performance by Marina Jordão

Marina Jordão talks about how the right QA metrics can sharpen priorities, reduce risk, and spark better conversations across the team.

How QA Teams Validate Highly Specialised AI (Without Becoming Scientists Overnight) by Katrina Collins

This is a thoughtful deep dive by Katrina Collins into how QA teams can confidently test AI systems in specialist domains without pretending to be experts, using a clear split between what QA owns and where subject matter experts must step in.

⚙️ Automation

Building Reliability in AI-Generated Tests by Pravesh Ramachandran

AI-generated tests are impressively fast to produce, but they can quietly become unreliable just as fast. Pravesh Ramachandran argues that speed is meaningless without trust in what those tests actually cover, and walks through a practical framework for making AI-generated tests usable in the real world.

Mastering Cypress Network Requests & API Testing: A Complete Guide by Anuradha Liyanage

Want to learn how to do API testing with Cypress? Take a look at this guide by Anuradha Liyanage, which shares practical examples of intercepting, mocking, and validating network calls with Cypress.

How AI Can Tell You Why Your Tests Failed (And How to Fix Them)

This piece explores a neat way to take the pain out of CI failures by letting AI read long, noisy test logs and turn them into clear, human-friendly explanations with suggested fixes. It’s a practical walkthrough of wiring that into a CI pipeline, with the quiet observation that most “mysterious” test failures are simple once someone (or something) actually reads the logs for you.

I Built Selenium Self-Healing Tests with AI That Fix Themselves (Here’s How)

This article walks through a clever, hands-on approach to self-healing Selenium tests, using local AI to automatically fix broken locators when the UI changes.

What if Your Test Data Could Tell Your Framework HOW to Run, Not Just WHAT to Test? by Sachin Koirala

Sachin Koirala introduces a smart twist on data-driven testing, showing how test data can control execution behavior itself, not just inputs.
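
To make the idea concrete, here is a minimal Python sketch of test data that carries execution hints alongside inputs. It is not taken from Sachin's article; the `Case` fields (`retries`, `skip`) and the `run_case` helper are hypothetical names chosen purely for illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Case:
    # WHAT to test
    name: str
    value: int
    expected: int
    # HOW to run it (hypothetical execution hints carried by the data)
    retries: int = 0
    skip: bool = False


def run_case(case: Case, fn: Callable[[int], int]) -> str:
    """Run one data row, letting the row itself decide skip/retry behaviour."""
    if case.skip:
        return "skipped"
    # One initial attempt plus however many retries the data row asks for.
    for _ in range(case.retries + 1):
        if fn(case.value) == case.expected:
            return "passed"
    return "failed"


def make_flaky(fail_times: int) -> Callable[[int], int]:
    """Build a doubling function that returns a wrong value for its first `fail_times` calls."""
    calls = {"n": 0}

    def fn(x: int) -> int:
        calls["n"] += 1
        return x * 2 if calls["n"] > fail_times else -1

    return fn
```

The runner stays generic: a row for a known-flaky step can ask for a retry, and a row for an unfinished feature can mark itself as skipped, without any change to the test code.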

💨 Performance

How to Iterate Dynamic Values in JMeter Using Regex/JSON Extractor and While Controller

This article walks through a neat way to loop over dynamically extracted values in JMeter, using a While Controller and a couple of built-in functions to handle responses.

🌞 Accessibility

5 reasons why WCAG AA compliance does not mean your website is accessible by Craig Abbott

How often do testers stop at “passes WCAG” instead of asking “is this actually usable?” Craig Abbott gives concrete, real-world examples where something can technically pass audits yet still be frustrating or unusable for real users.

🛠️ Resources & Tools

playwright-smart-reporter — An intelligent Playwright HTML reporter with AI-powered failure analysis, flakiness detection, and performance regression alerts.

Dev Browser — A browser automation plugin for Claude Code that lets Claude control your web browser to close the loop on your development workflows.

react-native-bugbubble — Monitor network requests, WebSocket events, console logs, and analytics events in real-time with a beautiful, draggable UI.

📝 List of Software Testers

Do you create content around Software Testing? Submit your blog details here and I will add it to the list.

🎁 Bonus Content

📌 OTHER INTERESTING STUFF

⭐ LAST WEEK’S MOST READ

Anti-Patterns in Playwright People Don’t Realize They’re Doing by Gunashekar R

The Complete Guide to RAG Quality Assurance: Metrics, Testing, and Automation by Irfan Mujagić

How to Build a QA Team Engineers Actually Want to Work With by David Ingraham

😂 And Finally,

📨 Send Me Your Articles, Tutorials, Tools!

Wrote something? Send links via Direct Message on Twitter @thetestingkit (details here). If you have any suggestions for improvement or corrections, feel free to reply to this email.

Thanks to everyone for subscribing and reading!

Happy Testing!

Pritesh (@priteshusdadiya)

Great curation on the self-healing test frameworks. The Pravesh piece on AI-generated test reliability nails something critical: velocity without validation breeds false confidence, not actual coverage. Been seeing teams ship AI-gen'd E2E suites that pass green on dashboards but miss basic regression scenarios because the model over-indexed on happy paths during generation. The delta between "test exists" and "test validates intended behavior" is where most AI automation strategies collapse, especially when dealing with edge cases that weren't well represented in training examples.